Author:

Author:

Mentor:

Abstract:

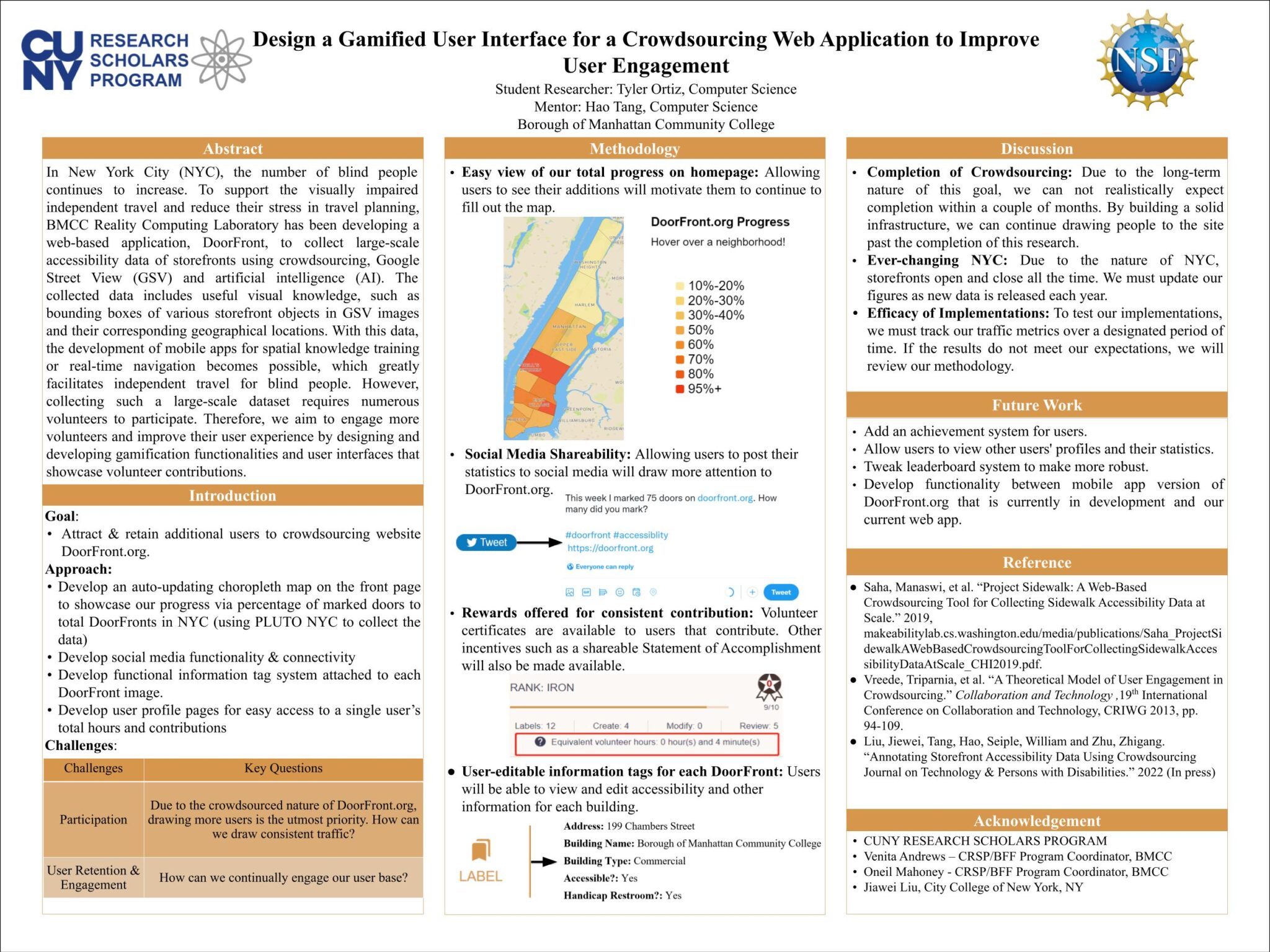

In New York City (NYC), the number of blind people continues to increase. To support independent travel for visually impaired people and reduce their stress in travel planning, the BMCC Reality Computing Laboratory has been developing a web-based application, DoorFront, to collect large-scale accessibility data on NYC storefronts using crowdsourcing, Google Street View (GSV), and artificial intelligence (AI). The collected data includes useful visual knowledge, such as bounding boxes of various storefront objects in GSV images and their corresponding geographical locations. This data makes it possible to develop mobile apps for spatial knowledge training or real-time navigation, which can greatly facilitate independent travel for blind people. However, collecting such a large-scale dataset requires the participation of numerous volunteers. We therefore aim to engage more volunteers and improve their user experience by designing and developing gamification functionalities and user interfaces that showcase volunteer contributions. We anticipate that these features will lead more volunteers to participate and to dedicate more time to helping us collect accessibility information for NYC storefronts.