Authors: Jiawei Liu, Jing Huo

Mentor: Hao Tang

Institution: BMCC

Abstract:

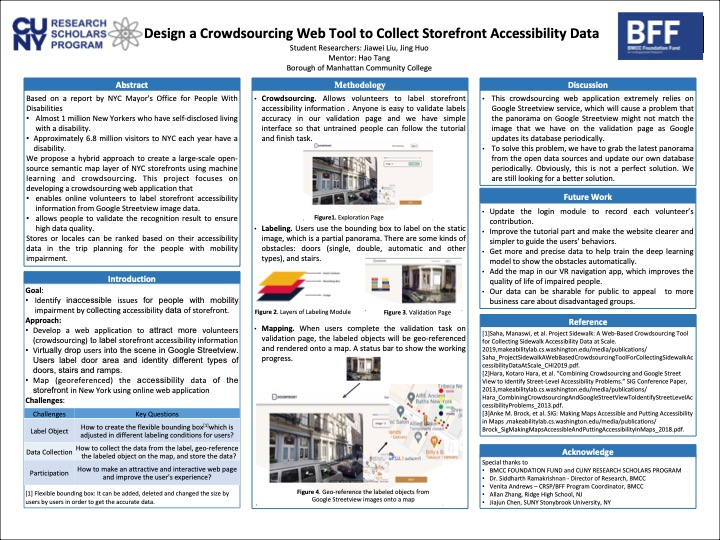

According to a report by the NYC Mayor's Office for People with Disabilities (MOPD), nearly 1 million New Yorkers, roughly 11.2% of the city's population, have self-disclosed living with a disability. MOPD also estimates that approximately 6.8 million visitors to our city each year have a disability. We propose creating a large-scale, open-source semantic map layer of NYC sidewalks and storefronts using a hybrid approach that combines machine learning and crowdsourcing, with close community engagement. In this project, we focus on developing a crowdsourcing web application that enables online crowd workers and volunteers to help label image data remotely. The web app also lets people validate the model's recognition results to ensure high data quality: users can virtually walk through New York City streets in Google Street View and select an image of a particular storefront to verify. All labeled data can then be used to improve the accuracy of the image recognition model.
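The abstract does not say how individual validations are combined into a final verdict. One common way to turn several users' checks of a model prediction into a single decision is a majority vote with a minimum number of votes. The sketch below assumes that scheme; the `Validation` record, the `aggregate` function, and the `min_votes` and `threshold` parameters are hypothetical and are not taken from the project's code.

```python
from collections import Counter
from dataclasses import dataclass


# Hypothetical record: one user's verdict on one model prediction.
@dataclass(frozen=True)
class Validation:
    image_id: str  # hypothetical ID of a Street View storefront image
    user_id: str
    agrees: bool   # True if the user confirms the model's label


def aggregate(validations, min_votes=3, threshold=0.5):
    """Map each image_id to 'accepted', 'rejected', or 'pending'.

    Each user counts once per image (their latest vote wins). An image stays
    'pending' until it has at least `min_votes` distinct voters; after that it
    is 'accepted' only if the share of confirming votes is strictly above
    `threshold` (so a tie is 'rejected').
    """
    latest = {}
    for v in validations:
        latest[(v.image_id, v.user_id)] = v.agrees  # keep each user's most recent vote

    votes = {}
    for (image_id, _user), agrees in latest.items():
        votes.setdefault(image_id, Counter())[agrees] += 1

    status = {}
    for image_id, counts in votes.items():
        total = counts[True] + counts[False]
        if total < min_votes:
            status[image_id] = "pending"
        elif counts[True] / total > threshold:
            status[image_id] = "accepted"
        else:
            status[image_id] = "rejected"
    return status


votes = [
    Validation("img1", "a", True),
    Validation("img1", "b", True),
    Validation("img1", "c", False),
    Validation("img2", "a", False),
]
print(aggregate(votes))  # {'img1': 'accepted', 'img2': 'pending'}
```

Requiring several distinct voters before an image is accepted or rejected limits the influence of any single careless labeler. Raising `min_votes` or `threshold` trades labeling throughput for data quality.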

To increase user engagement, we apply basic game principles such as mission-based tasks and progress bars. Currently, we mainly focus on the differences in behavior and labeling quality among labelers and on improving the user experience to reduce common labeling errors.
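The abstract does not define how differences in labeling quality among labelers are measured. One simple proxy is each labeler's rate of agreement with the per-image consensus (the most common label). The sketch below assumes that metric. The `labeler_agreement` function, its `min_labelers` parameter, and the storefront category names are hypothetical examples.

```python
from collections import Counter, defaultdict


def labeler_agreement(labels, min_labelers=2):
    """Compute each labeler's agreement rate with the per-image consensus.

    labels: {image_id: {user_id: label}}
    Consensus is the unique most common label for an image. Images with fewer
    than `min_labelers` labelers, or with a tie for the top label, are skipped.
    """
    agree = defaultdict(int)
    total = defaultdict(int)
    for image_id, by_user in labels.items():
        if len(by_user) < min_labelers:
            continue  # too few labels to form a meaningful consensus
        ranked = Counter(by_user.values()).most_common()
        if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
            continue  # tie: no clear consensus, so nobody is scored on this image
        consensus = ranked[0][0]
        for user_id, label in by_user.items():
            total[user_id] += 1
            agree[user_id] += label == consensus  # bool adds as 0 or 1
    return {user: agree[user] / total[user] for user in total}


labels = {
    "img1": {"a": "pharmacy", "b": "pharmacy", "c": "grocery"},
    "img2": {"a": "grocery", "b": "grocery", "c": "grocery"},
    "img3": {"a": "pharmacy", "c": "grocery"},  # tie, skipped
}
print(labeler_agreement(labels))  # {'a': 1.0, 'b': 1.0, 'c': 0.5}
```

A consistently low agreement rate may point to a labeler who needs clearer instructions, or to a category that is easy to confuse. Both cases can guide the user-experience changes aimed at reducing common labeling errors.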


Katherine Conway

I am sure this is much needed by the differently abled community. One question: are there different types of accessibility issues (beyond types of doors, ramps, etc.)? Are the issues different for someone who is blind versus someone in a wheelchair versus someone using a motorized scooter? Do those inputting the information have guidelines?